Surgical robot simulation with BBZ console

Introduction

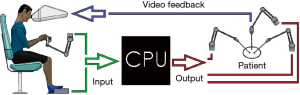

Minimally invasive surgery can be performed with robotic assistance, as an evolution of laparoscopic surgery. Robotic assisted procedures are more expensive than traditional ones, owing to instrument costs and longer intervention times. However, when properly used, the robot can improve the surgeon’s ability. Otherwise, the robotic procedure may put excessive stress on the surgeons and their operating room team, thus increasing the risk for patients. Robots for assisted surgery are complex and expensive systems; consequently, they require training devices and scenarios that help new learners master the use of the robot and improve their skills. The same has been true of other complex and expensive systems, such as those used in aeronautics and nuclear power control. In all these domains, procedure-specific technical skills are as important as a range of non-technical skills, which are often overlooked even though they carry a high potential for error. As with technical skills, which are acquired over many years of practice and training, non-technical skills require both training and experience. Currently, the most widely used robot in abdominal and pelvic surgery is the da Vinci Surgical System (1), by Intuitive Surgical Inc. Its main technical advantages include three-dimensional visualization, magnification of the operative field, tremor reduction, and a range of motion approximating that of the human wrist. A particular set of skills is needed to master this device’s human-machine interface, since robot assisted minimally invasive surgical gestures differ from laparoscopic ones, and even more from those of open surgery. In robot assisted surgery, the surgeon has to learn how to properly control the tele-operated instruments without relying on force feedback in their hands. 
The robot provides only visual feedback, through a three-dimensional, immersive and enhanced high-definition vision system located on the master console. A tele-operated surgical system can be considered a “black box”, in which an input produces an output without the user needing any knowledge of the internal implementation. The input provided on the handles and footswitches at the user’s side (usually called the “master”) is replicated at the remote side (called the “slave”) by the surgical instruments. Simultaneously, a stereoscopic camera system (the “endoscope”) captures the instruments in action and returns visual feedback to the user through the aforementioned immersive three-dimensional vision system on the master console. Figure 1 shows a block diagram of a tele-operated surgical system.

The da Vinci Surgical System also provides other useful functions, such as EndoWrist manipulation. EndoWrist instruments are designed to provide the surgeon with natural dexterity and a range of motion far greater than that of the human hand. The instrument’s wrist is directly connected to the forceps; it is a full spherical robotic wrist designed to allow rotational movement in any direction. Figure 2 shows the range of motion the EndoWrist tools can achieve.

Another useful function is called “clutching”. As the name suggests, it works like the clutch of a car, which disengages the engine shaft from the gearbox input shaft: when the clutch pedal is pressed, the engine keeps turning but no power flows to the gearbox. In the same way, in the da Vinci Surgical System, the user can engage the clutch and reposition the master-side manipulators without moving the slave-side instruments, which are inserted inside the patient. This function is useful because it allows the surgeon to assume a more comfortable position without compromising the procedure itself. The transition between the clutched and unclutched modes is seamless.
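The clutching behavior described above can be illustrated with a minimal sketch. It is not the da Vinci control software: the class name `ClutchedTeleop`, the position-only model and the `scale` factor are assumptions made purely for illustration. The sketch forwards only the change in master position to the instrument, which is one simple way to obtain a transition without jumps when the clutch is released.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ClutchedTeleop:
    """Toy position-only master/slave coupling (hypothetical, not the da Vinci API)."""

    instrument_pos: Vec3 = (0.0, 0.0, 0.0)
    scale: float = 1.0  # assumed master-to-instrument motion scaling
    clutched: bool = False
    _last_master: Optional[Vec3] = None

    def set_clutch(self, engaged: bool) -> None:
        self.clutched = engaged

    def update(self, master_pos: Vec3) -> Vec3:
        """Feed the latest master handle position; return the instrument position."""
        if self._last_master is not None and not self.clutched:
            # Only the change in master position is forwarded, so the two
            # sides never need to share an absolute reference.
            self.instrument_pos = tuple(
                p + self.scale * (m - last)
                for p, m, last in zip(self.instrument_pos, master_pos, self._last_master)
            )
        # The master is tracked even while clutched, so releasing the
        # clutch does not produce a jump on the instrument side.
        self._last_master = master_pos
        return self.instrument_pos


teleop = ClutchedTeleop()
teleop.update((0.0, 0.0, 0.0))
teleop.update((1.0, 0.0, 0.0))              # instrument follows the hand
teleop.set_clutch(True)
teleop.update((5.0, 0.0, 0.0))              # hand repositions, instrument holds
teleop.set_clutch(False)
final_pos = teleop.update((6.0, 0.0, 0.0))  # motion resumes from the held pose
```

In this toy run the hand travels 6 units in total, but the instrument moves only by the 2 units covered while the clutch was released.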

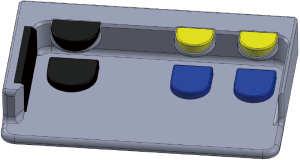

As previously mentioned, the only feedback provided to the surgeon consists of three-dimensional, immersive and enhanced vision. The stereo camera is mounted on a robotic arm similar to those carrying and driving the instruments. During the procedure, the surgeon can control the camera movements by pressing the camera pedal, which engages the clutch for the instruments and switches control to the camera arm. Camera control resembles the way a newspaper is usually handled: to read the news in the lower part of the page, both hands move upward; to read the news in the left part, both hands move to the right; to get a better view of small letters, both hands move closer to the face. The da Vinci system has four arms mounted on a central pillar. One arm is for the camera, while the other three are for the instruments. Since three instruments cannot be controlled simultaneously with only two hands, the system provides a dedicated pedal that lets the user switch control from one instrument to the one mounted on the fourth arm. This pedal is the vertical black pedal on the left side of the footswitch panel. During system setup, the user must set which master arm will be able to control two instruments. This means that the surgeon must plan in advance the instruments and the corresponding hands to be used during the procedure. To perform tissue dissection, some tools are supplied with monopolar or bipolar energy. On the middle-right side of the footswitch panel there are four pedals: two yellow ones in the upper row and two blue ones in the lower row. The left yellow pedal activates the high energy level of the bipolar tool controlled by the left hand. The left blue pedal, instead, activates the low energy level of the bipolar tool, as well as the monopolar tool, controlled by the left hand. The same pattern is replicated by the pedals on the right side. 
Figure 3 shows the footswitches panel of the da Vinci Surgical System.
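As a rough illustration of the camera pedal behavior, the sketch below routes hand motion either to the instruments or to the camera. It assumes that the newspaper analogy means the hands drag the scene, so the camera moves opposite to the average hand displacement. The function name `route_hand_motion`, the axis convention (x to the right, y up, z toward the surgeon) and the averaging of the two hands are all assumptions, not a description of the actual da Vinci controller.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]
ZERO: Vec3 = (0.0, 0.0, 0.0)


def route_hand_motion(camera_pedal: bool, left_delta: Vec3, right_delta: Vec3) -> Dict[str, Vec3]:
    """Decide which arm receives the hand displacement of the current step.

    Hypothetical axis convention: x to the right, y up, z toward the surgeon.
    """
    if camera_pedal:
        # Instruments are clutched (held still). The scene is "dragged" by
        # both hands like a newspaper, so the camera moves the opposite way.
        mean = tuple((l + r) / 2.0 for l, r in zip(left_delta, right_delta))
        camera = tuple(-m for m in mean)
        return {"left_tool": ZERO, "right_tool": ZERO, "camera": camera}
    # Normal mode: each hand drives its own instrument, the camera holds.
    return {"left_tool": left_delta, "right_tool": right_delta, "camera": ZERO}


# Both hands move up by one unit while the camera pedal is pressed:
# the camera moves down, bringing the lower part of the scene into view.
step = route_hand_motion(True, (0.0, 1.0, 0.0), (0.0, 1.0, 0.0))
```

Under these assumptions, moving both hands to the right shifts the view toward the left part of the scene, and pulling both hands toward the face moves the camera toward the scene.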

As this brief overview of the da Vinci System and its features suggests, fully mastering such a complex system requires specific training on its human-machine interface.

Training in robotic assisted surgery

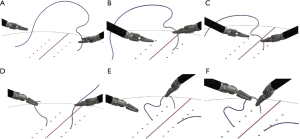

Training in robotic assisted surgery can take place in both real and simulated environments. A real environment involves patients, cadavers or animals, while a simulated environment involves a dry lab (plastic parts) or virtual reality. Training with real robots is very expensive because of the high cost of purchasing and maintaining the robotic surgical system, and it also raises ethical issues if the training takes place in a real environment. For these reasons, virtual reality training systems were developed to help surgeons improve the non-technical skills required to perform robotic assisted surgery. Virtual reality based training systems are composed of hardware and software. Since the most common robot in this field is the da Vinci Surgical System, the hardware is usually designed to reproduce the da Vinci user experience. Consequently, the positions of the eyes, hands and feet match those on the da Vinci console. For the same reason, the shape and functions of the input devices (handles and footswitches) are copied from the da Vinci master console. The same design rule also applies to the vision system. To replicate the posture, all the training systems are provided with an armrest. The training software is a virtual reality environment that provides different scenarios in which the user can perform training tasks of increasing difficulty. These tasks range, for instance, from basic manipulation of rigid objects to advanced manipulation of deformable objects, such as soft, anatomical-like tissues. The core purpose of a simulator is to teach how to properly master the minimally invasive surgical tools. However, some more elaborate products also try to teach how to perform various steps of surgical procedures. Minimally invasive surgical procedures can be decomposed into a sequence of tasks. 
Among these, the most common are: tissue dissection and manipulation, use of monopolar or bipolar energy tools for dissection and cauterization, needle grabbing and manipulation, suture thread manipulation, knot tying, and stapler usage. In addition, other tasks consist of handling the camera control, the clutch function, and the third arm swap function. Furthermore, each surgical task can be decomposed into basic gestures. As an example, a four-throw suturing task (Figure 4) can be decomposed into a few rudimentary surgical gestures, named surgemes (2,3).

Each surgical gesture performed with a robotic assisted technique appears similar to its counterpart in a standard laparoscopic procedure, but differs in the surgeon’s posture, tool dexterity, and force and vision feedback. Therefore, performing a robotic assisted procedure requires specific training. As explained in the introduction, the only feedback provided to the surgeon during a robotic assisted procedure is the 3D vision. This feature is fundamental in the development of devices for training in robotic surgery, as it allows training in virtual reality scenarios to be as effective as real practice on rubber or plastic parts. The effectiveness of the simulation depends on how realistic its rendering is perceived to be, so one important aspect is the physical behavior of the scenarios and elements, for example during collisions. Some surgemes may be simulated with rigid bodies; others involve the interaction between deformable objects. The physical behavior must be realistic in both cases. For example, surgeme (A) “reach for needle” involves the forceps and the needle, both of which are rigid bodies. When the tool grabs the needle, the needle must be held firmly between the forceps jaws; to rotate the needle between the jaws without dropping it, however, the surgeon has to open them slightly and either push or pull the needle with the other tool, or push it against a rigid part of the environment. If deformable objects are involved, as in the case of surgeme (C) “insert and push the needle through tissue”, the interaction is between the forceps and the needle (rigid to rigid) and between the tip of the needle and the tissue (rigid to deformable). While rigid bodies are quite simple to simulate in virtual reality, replicating deformable objects, like the surgical thread or soft anatomical-like tissues, is quite difficult. For example, a challenging part of the simulation is computing the behavior of fluids, like blood, inside anatomical-like tissues. 
The hardware and software of a virtual reality based training system are important tools for practice, but the educational value of such systems depends on their ability to report errors during training. Since the hardware driving the simulation software is an input device, all movements of the hands and feet are recorded by electronic sensors. This means that the system can provide an objective measurement of a large set of parameters during task execution. These parameters, such as execution time, hand workspace, instruments’ out-of-view time, collisions between instruments, and forces exerted by the instruments, are useful metrics that can help both the supervisor and the user to assess progress while performing tasks. Since all these metrics are objective evaluations, they are not subject to the user’s impressions of correctness, and they may be stored in a database as a reference. Each user can access their saved metrics in order to compare them across their training history. Additionally, these metrics may also be compared with those of expert users, thus providing an important reference for trainees and supervisors.
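To make the idea of objective metrics concrete, the following sketch computes three of the parameters listed above from a recorded stream of samples. The `Sample` record, its fields, and the definitions used here (workspace as the volume of the axis-aligned bounding box of the hand positions, out-of-view time as the sum of the intervals that start with the tool not visible) are illustrative assumptions; the source does not specify how any simulator defines them.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Sample:
    """One recorded sensor sample (hypothetical record layout)."""
    t: float                          # timestamp, seconds
    hand: Tuple[float, float, float]  # hand position, arbitrary length unit
    tool_in_view: bool                # True if the tool is inside the camera view


def task_metrics(samples: List[Sample]) -> Dict[str, float]:
    """Compute execution time, out-of-view time and hand workspace volume."""
    if len(samples) < 2:
        raise ValueError("at least two samples are needed")

    execution_time = samples[-1].t - samples[0].t

    # Sum the duration of every interval that starts with the tool hidden.
    out_of_view = sum(
        b.t - a.t for a, b in zip(samples, samples[1:]) if not a.tool_in_view
    )

    # Workspace: volume of the axis-aligned box enclosing all hand positions.
    extents = [
        max(s.hand[i] for s in samples) - min(s.hand[i] for s in samples)
        for i in range(3)
    ]
    workspace = extents[0] * extents[1] * extents[2]

    return {
        "execution_time": execution_time,
        "out_of_view_time": out_of_view,
        "workspace_volume": workspace,
    }


recording = [
    Sample(0.0, (0.0, 0.0, 0.0), True),
    Sample(1.0, (1.0, 0.0, 0.0), False),
    Sample(2.0, (1.0, 2.0, 0.0), False),
    Sample(3.0, (1.0, 2.0, 3.0), True),
]
report = task_metrics(recording)
```

Because every value is derived from the recorded samples alone, two runs of the same task can be compared directly, for instance a trainee's latest attempt against an earlier one or against a stored expert reference.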

BBZ console for training

Actaeon is the console for training in robot assisted surgery developed by BBZ (4), a spin-off company of the University of Verona (Italy). The device simulates the da Vinci master console’s features and integrates BBZ software. The hardware is very compact and provides all the required features of the da Vinci console. It is equipped with two (left and right) high-dexterity input devices with finger-clutch switches, one seven-pedal footswitch panel and one immersive three-dimensional HD video system with a user presence sensor. The functionality of this compact design is demonstrated by the tele-operation of da Vinci instruments with BBZ’s master console (Figure 5). The system has a total setup time of less than 5 minutes and weighs less than 10 kg. Its small footprint allows it to be stored on common bookshelves, and it can be carried in standard cabin luggage (55 cm × 40 cm × 20 cm) (Figure 6). Easy transport and fast setup simplify logistics and allow for new approaches to robotic surgery training. In fact, they make it possible to set up classrooms where users work on several consoles under the direct supervision of a single expert surgeon and, at the same time, to deliver remote training directly at the surgeon’s home. The software allows users to acquire all the skills required to use the surgical robot effectively. It offers more than thirty different training tasks, which cover all the functionalities of the robot: from proper control of the endoscope and clutch to third arm use, and from energy tools to needle and thread handling (Figure 7). All the training tasks take advantage of the realism of the physics simulation, which increases the effectiveness of training and reduces training time. The realism of the simulation also allows accurate metrics of task execution to be collected, which, in turn, provide a realistic measure of the user’s performance and proficiency. 
The collected metrics include, among others, execution time, economy of motion, motion smoothness, the user’s hand workspace, and tool out-of-view time. Figure 8 shows an example of a score report after task execution.
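The source does not state how economy of motion and motion smoothness are computed. As a hedged illustration, the sketch below uses two commonly used proxies: total path length for economy of motion, and mean squared jerk (estimated with a third-order finite difference on uniformly sampled positions) for smoothness. The function names and the uniform sampling interval `dt` are assumptions, and this is not necessarily the scoring used by the BBZ software.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def path_length(points: Sequence[Vec3]) -> float:
    """Economy-of-motion proxy: total distance travelled by the tool tip."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def mean_squared_jerk(points: Sequence[Vec3], dt: float) -> float:
    """Smoothness proxy: lower values mean smoother motion.

    Jerk (third time derivative of position) is estimated from four
    consecutive, uniformly spaced samples: (p3 - 3*p2 + 3*p1 - p0) / dt**3.
    """
    if len(points) < 4:
        raise ValueError("at least four samples are needed")
    total = 0.0
    for p0, p1, p2, p3 in zip(points, points[1:], points[2:], points[3:]):
        jerk = [(d - 3 * c + 3 * b - a) / dt**3 for a, b, c, d in zip(p0, p1, p2, p3)]
        total += sum(j * j for j in jerk)
    return total / (len(points) - 3)


# Two toy trajectories with the same path length but different smoothness.
steady = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
shaky = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
```

The two toy trajectories cover the same distance, yet only the back-and-forth one has non-zero jerk, which suggests why a score report may list economy of motion and smoothness as separate metrics.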

Conclusions

This paper presents a new console for training in robot assisted surgery. The hardware provides all the required features of the da Vinci console, and the software allows users to acquire all the skills required to use the surgical robot effectively. Easy transport and fast setup simplify logistics and allow for new approaches to robotic surgery training. In fact, with the presented system it is possible to set up classrooms where users work on several consoles under the supervision of a single expert surgeon, or to deliver training remotely, directly at the surgeon’s home. All users’ metrics are collected in a database and may be compared with those previously collected or with reference measurements taken from expert users. Since all the measurements are taken objectively by the training system, this helps to standardize the evaluation method and also helps novices improve their skills before approaching robot assisted surgery.

Acknowledgements

None.

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

References

1. Intuitive Surgical, Inc. 1020 Kifer Road Sunnyvale, CA 94086-5304 USA. Available online: http://www.intuitivesurgical.com/

2. Haro BB, Zappella L, Vidal R. Surgical gesture classification from video data. Med Image Comput Comput Assist Interv 2012;15:34-41.

3. Lin HC, Shafran I, Yuh D, et al. Towards automatic skill evaluation: detection and segmentation of robot-assisted surgical motions. Comput Aided Surg 2006;11:220-30.

4. BBZ srl, via Dante Alighieri 27, 37068 Vigasio (Verona), Italy. Available online: www.bbzsrl.com

5. Bovo F, De Rossi G, Visentin F. Surgical robot teleoperation with BBZ console. Asvide 2017;4:149. Available online: http://www.asvide.com/articles/1461

6. Bovo F, De Rossi G, Visentin F. BBZ training system setup. Asvide 2017;4:150. Available online: http://www.asvide.com/articles/1462

7. Bovo F, De Rossi G, Visentin F. Example of training to monopolar tool use. Asvide 2017;4:151. Available online: http://www.asvide.com/articles/1463

Cite this article as: Bovo F, De Rossi G, Visentin F. Surgical robot simulation with BBZ console. J Vis Surg 2017;3:57.