Virtual reality, mixed reality and augmented reality in surgical planning for video or robotically assisted thoracoscopic anatomic resections for treatment of lung cancer

Introduction

Unlike 2D interfaces, immersive 3D interfaces such as virtual reality (VR), mixed reality (MR) and augmented reality (AR) generate models that users can visualize, manipulate and interact with as objects built from digital information. These interfaces present multidimensional information using classes of display along the reality-virtuality continuum. The technology is currently expensive and is not available in most hospitals. We participate in the development of a platform composed of mobile (M3DMIX) and desktop (M3DESK) apps that allows users to view, manipulate, rotate or even print anatomic models of the different structures of the chest (chest wall, lungs, tracheobronchial tree, heart and great vessels, pulmonary vessels and the tumor), all together, in any combination, or one by one, in full 3D dynamic reproduction.

In this report, we evaluate VR, MR and AR as exploratory tools used before the surgical procedure to improve decision making and surgical planning in a challenging case of video-assisted thoracoscopic surgery (VATS) lobectomy.

Methods

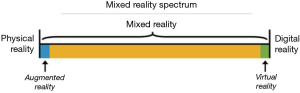

The concept of a “virtuality continuum” (1) relates to the mixture of classes of objects presented in any particular display situation, as illustrated in Figure 1, where the real environment is shown at one end of the continuum and the virtual environment at the opposite extreme.

At the extreme left of the continuum are real objects that can be observed directly: the natural world, where nothing is computer generated. At the extreme right are virtual objects that need computers to be observed, where everything is computer generated. Everything between these extremes belongs to the MR spectrum.

VR provides a completely digital environment, which may give the user the greatest level of immersion. The virtual world may simulate the properties of real-world environments or exceed the limits of reality. Users remain disconnected from the real world.

AR brings aspects of the virtual world into the real world; it is closer to the real environment than the virtual environment. Users remain in the real world while experiencing a digital layer of information or graphics overlaid on the real-world scene. The digital content is not strongly connected to the real world.

Considering MR as an independent concept, positioned in the middle of the “virtuality continuum”, it is a perfect blend of the real world and the digital world. Both worlds are strongly connected, making it possible for physical and digital objects to coexist and interact with users in real time (2).

The intraoperative application of VR, MR and AR offers exciting possibilities, such as supporting accurate performance by the surgeon during procedures and facilitating preoperative planning, surgical simulation and training (Figure 2).

M3DESK and M3DMIX are applications designed to create and view 3D models using VR, MR and AR, to facilitate planning and execution of the procedure, and to improve communication and information exchange among doctors, other health care professionals and even patients. The platform allows quick and comprehensive manipulation of preoperative contrast-enhanced CT scan images to create 3D models of the patient’s internal structures.

The scan was performed on a General Electric LightSpeed VCT scanner (GE Healthcare, Waukesha, WI, USA) using 120 kVp, 400 mA, a 0.4 s rotation time, a helical pitch of 1.375 and a slice thickness of 1.25 mm. Images were reconstructed using the standard convolution kernel.

CT images were exported in DICOM format and uploaded into the M3DESK software (P3DMED, Porto Alegre, RS, Brazil) for segmentation and 3D modeling. The chest wall, lungs, heart, vessels, tracheobronchial tree and tumor were automatically segmented using thresholding and region-growing methods. The patient’s 3D model was generated from meshes of each segmented structure (the model layers), created using the Marching Cubes algorithm (4). For each model layer, color and transparency were assigned to facilitate manipulation and visualization of the patient’s 3D model (Figure 3).
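
The internals of the M3DESK segmentation are not described here, so the following is only a minimal sketch of how thresholding combined with region growing can work in general. The function name `region_grow`, the intensity window and the toy volume are illustrative assumptions, not values taken from the software or from this patient.

```python
from collections import deque


def region_grow(volume, seed, lower, upper):
    """Collect voxels 6-connected to `seed` whose intensity lies in [lower, upper].

    `volume` is a nested list indexed as volume[z][y][x] (hypothetical layout).
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])

    def in_window(z, y, x):
        # Thresholding step: keep only intensities inside the window.
        return lower <= volume[z][y][x] <= upper

    if not in_window(*seed):
        return set()

    region = {seed}
    queue = deque([seed])
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in neighbours:
            nz, ny, nx = z + dz, y + dy, x + dx
            inside = 0 <= nz < depth and 0 <= ny < height and 0 <= nx < width
            if inside and (nz, ny, nx) not in region and in_window(nz, ny, nx):
                # Region-growing step: accept the neighbour and expand from it later.
                region.add((nz, ny, nx))
                queue.append((nz, ny, nx))
    return region


# Toy 1 x 3 x 5 volume with made-up intensities: bright voxels stand in for
# contrast-filled vessel, dark voxels for aerated lung.
toy_volume = [[
    [300, 310, -800, 305, 300],
    [-800, 320, -800, -800, 295],
    [-800, -800, -800, -800, -800],
]]
vessel = region_grow(toy_volume, seed=(0, 0, 0), lower=200, upper=500)
```

In this toy case the bright voxels on the right of the first row fall inside the intensity window but are not connected to the seed, so they are left out of the region. This is the practical difference between plain thresholding and thresholding followed by region growing.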

The software allows the patient’s 3D model to be exported to the cloud or to a 3D printer. Models exported to the cloud are viewed on a mobile device using the M3DMIX app at a chosen point on the continuum, creating a VR, MR or AR experience. To allow the MR or AR experience, a TAG holder (QR Code TAG) was added to the 3D model in the M3DESK environment. The heart, vessel and tumor layers, as well as the TAG holder, were exported in STL format for 3D printing.
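
To illustrate what an STL export of a mesh layer involves, the sketch below writes a list of triangles as ASCII STL text. It is not the M3DESK exporter: the function names `unit_normal` and `to_ascii_stl`, the layer name and the single triangle are assumptions made for the example.

```python
import math


def unit_normal(a, b, c):
    """Unit normal of triangle (a, b, c), following the right-hand rule."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]
    length = math.sqrt(sum(k * k for k in n))
    # Degenerate triangles get a zero normal instead of raising an error.
    return [k / length for k in n] if length else [0.0, 0.0, 0.0]


def to_ascii_stl(name, triangles):
    """Serialize triangles (each a tuple of three xyz vertices) as ASCII STL text."""
    lines = [f"solid {name}"]
    for a, b, c in triangles:
        nx, ny, nz = unit_normal(a, b, c)
        lines.append(f"  facet normal {nx:.6f} {ny:.6f} {nz:.6f}")
        lines.append("    outer loop")
        for x, y, z in (a, b, c):
            lines.append(f"      vertex {x:.6f} {y:.6f} {z:.6f}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# Hypothetical one-triangle "layer"; a real mesh would contain many thousands.
tumor_layer = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
stl_text = to_ascii_stl("tumor", tumor_layer)
```

STL stores only triangle geometry, without color or transparency, which is consistent with exporting each layer to be printed as its own mesh.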

The model was printed on a Stratasys FDM 200 MC fused deposition modeling printer in ABS plastic with a layer thickness of 0.254 mm.

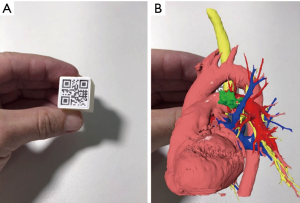

In the M3DMIX app, running on a mobile device, the patient’s 3D model was downloaded from the cloud and could be seen at three points on the “virtuality continuum”. At the VR point, the model is available without any TAG and can be moved, rotated and enlarged, and its dynamic layers have properties such as color and transparency, so layers can be continuously added and withdrawn. For viewing the model in AR, the TAG was printed on paper and attached to a cube (“magic cube”) that can even be sterilized for intraoperative use. By tracking the TAG, the mobile app was able to place the model at the same position and orientation as the cube. This approach gives the user a haptic experience with the patient’s model.
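
How the M3DMIX app tracks the TAG is not detailed here. As a rough sketch of the idea, assume a tracker reports the cube pose as a rotation plus a translation. Placing the model then amounts to applying that rigid transform to every model vertex. The names `pose_matrix` and `apply_pose`, the restriction to rotation about one axis, and the numeric pose are all hypothetical simplifications.

```python
import math


def pose_matrix(yaw_deg, translation):
    """4x4 homogeneous transform: rotation about the z axis, then translation.

    A real tracker would report a full 3D rotation; a single yaw angle keeps
    the sketch short.
    """
    t = math.radians(yaw_deg)
    c, s = math.cos(t), math.sin(t)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_pose(matrix, vertex):
    """Map a model-space vertex (x, y, z) into the tracked cube's frame."""
    h = (vertex[0], vertex[1], vertex[2], 1.0)
    return tuple(sum(matrix[r][k] * h[k] for k in range(4)) for r in range(3))


# Assumed pose for the example: cube rotated 90 degrees and shifted in x and z.
cube_pose = pose_matrix(90.0, (10.0, 0.0, 5.0))
placed = apply_pose(cube_pose, (1.0, 0.0, 0.0))
```

Re-evaluating the pose on every camera frame and re-applying it to the model is what makes the virtual model appear to follow the cube as the user turns it by hand.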

Another experience was to attach the TAG to the printed holder, which had previously been positioned in the desktop software. Pointing the mobile device at the printed model allows the user to superimpose different layers on it. The printed model and the virtual model were strongly connected, allowing interactions that appear to be “real”: an MR experience.

Clinical case

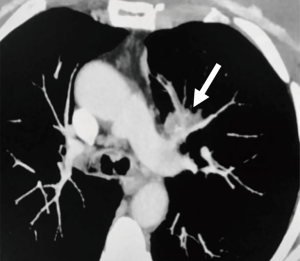

A 68-year-old female smoker (40 pack-years) presented with mild hemoptysis. An Angio-CT scan of the chest showed a 2.5 cm lesion at the hilum of the anterior segment of the left upper lobe with involvement of the first branch of the pulmonary artery (PA), highly suspicious for malignancy (Figure 4). A brain MRI and a bronchoscopy were normal. The PET scan showed that the lesion (Figure 5, arrowhead) had a SUVmax of 12.9 and revealed a second small lesion with a SUVmax of 2.5 in the same lobe (Figure 5, arrow), with no other lesions. Her pulmonary function tests (PFTs) showed severe COPD, and the predicted DLCO after pneumonectomy was 15%. We decided to perform a VATS left upper lobectomy, probably with an arterioplasty at the level of the first branch of the PA. We also felt that this was an excellent case in which to do 3D planning, for the first time, with the new tool on which we (Leonardo Frajhof, Jorge Lopes and Rui Haddad) participate as consultants with the developers (João Borges, Elias Hoffmann).

The Angio-CT was processed in the M3DESK desktop program, where the images were segmented into separate parts (chest wall, lungs, heart and vessels, tracheobronchial tree, pulmonary arteries and veins). These were sent to the cloud, then colored and reconstructed in the app (M3DMIX), as shown in Figures 6A and 6B.

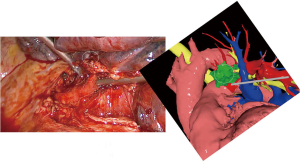

Figure 6A shows the 3D model with the tumor (green), heart and great vessels (pink), tracheobronchial tree (TBT) (yellow), lungs (gray), chest wall (orange), pulmonary artery (red) and pulmonary vein (blue). Figure 6B shows the TBT, vascular structures and tumor after the lungs and chest wall were removed with two simple clicks in the smartphone app. Figure 6C shows examples of rotating the model to see the relation of the tumor (green) to the first branch of the left PA (red), which helps in planning the resection. The neck of the artery is clearly seen in the third picture.

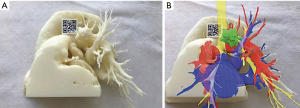

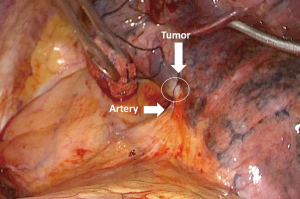

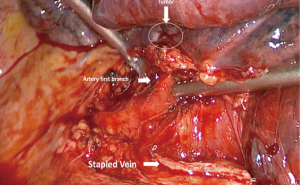

The patient was then brought to the OR, prepped and draped in the usual fashion for a VATS lobectomy, and a three-incision technique starting from the hilum was used (Figure 7). The chest cavity was inspected and no other lesions were found. The tumor was at the same level seen in the 3D planning (Figure 8). We opened the mediastinal pleura and stapled the left upper lobe vein. A difficult and laborious dissection of the first branch of the PA was then possible (Figure 9); once this branch was stapled, the rest of the surgery was easy and fast. Figure 10 compares the surgical field with the 3D model. The hilar lesion was a pulmonary adenocarcinoma and the second lesion was benign. A mediastinal node (station 5) was microscopically positive. The patient had an uneventful postoperative course and was discharged on postoperative day 4. Adjuvant chemotherapy was indicated. Figure 11 shows the 3D printed model with the QR Code TAG (A) and the 3D mixed reality view as seen in the M3DMIX app (B). Figure 12 shows the “magic cube” with the QR Code TAG (A) and the 3D augmented reality view as seen in the M3DMIX app (B).

Conclusions

The 3D model was very accurate and helped in planning and executing this operation. Displaying the patient’s 3D data through VR, MR and AR is a useful tool for surgical planning because it provides the surgeon with a true and spatially accurate representation of the patient’s anatomy. We plan to use this tool as an adjunct in all anatomic pulmonary resections.

Acknowledgments

Funding: None.

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/jovs.2018.06.02). JB reports other (CEO) from M3DMIX, during the conduct of the study. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee(s) and with the Helsinki Declaration (as revised in 2013). Written informed consent was obtained from the patient for publication of this Brief Report and any accompanying images.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Milgram P, Kishino F. A taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems E series D 1994;77:1321-9.

- Microsoft. Mixed Reality. Available online: https://developer.microsoft.com/en-us/windows/mixed-reality/mixed_reality

- Frajhof L, Borges J, Hoffmann E, et al. 3D model as seen in the App (mobile phone or tablet). Asvide 2018;5:611. Available online: http://www.asvide.com/article/view/25824

- Lorensen WE, Cline HE. Marching Cubes: A High-Resolution 3D Surface Construction Algorithm. Computer Graphics 1987;21:163-9.

- Frajhof L, Borges J, Hoffmann E, et al. Reconstructions that can be done in the desktop program. Asvide 2018;5:612. Available online: http://www.asvide.com/article/view/25825

- Frajhof L, Borges J, Hoffmann E, et al. The case: VATS left upper lobectomy. Asvide 2018;5:613. Available online: http://www.asvide.com/article/view/25826

Cite this article as: Frajhof L, Borges J, Hoffmann E, Lopes J, Haddad R. Virtual reality, mixed reality and augmented reality in surgical planning for video or robotically assisted thoracoscopic anatomic resections for treatment of lung cancer. J Vis Surg 2018;4:143.